Microsoft has proven off its newest analysis in text-to-speech AI with a mannequin known as VALL-E that can simulate somebody’s voice from simply a three-second audio sample, Ars Technica has reported. The speech can not solely match the timbre but in addition the emotional tone of the speaker, and even the acoustics of a room. It might someday be used for personalized or high-end text-to-speech functions, although like deepfakes, it carries dangers of misuse.

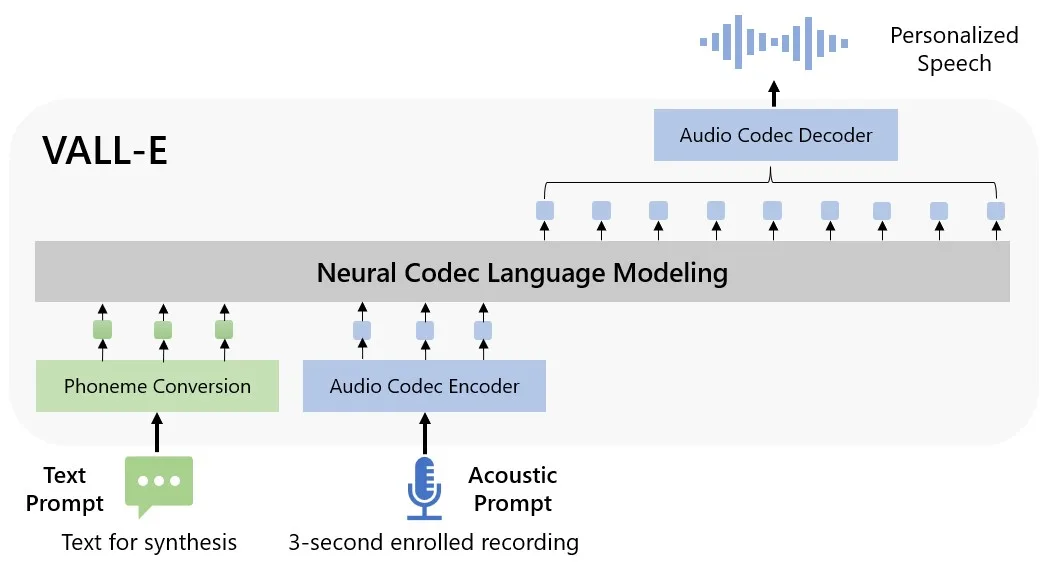

VALL-E is what Microsoft calls a “neural codec language model.” It’s derived from Meta’s AI-powered compression neural web Encodec, producing audio from textual content enter and short samples from the goal speaker.

In a paper, researchers describe how they skilled VALL-E on 60,000 hours of English language speech from 7,000-plus audio system on Meta’s LibriLight audio library. The voice it makes an attempt to mimic have to be a shut match to a voice within the coaching knowledge. If that is the case, it makes use of the coaching knowledge to deduce what the goal speaker would sound like if talking the specified textual content enter.

Microsoft

The crew exhibits precisely how properly this works on the VALL-E Github web page. For every phrase they need the AI to “speak,” they’ve a three-second immediate from the speaker to mimic, a “ground truth” of the identical speaker saying one other phrase for comparability, a “baseline” typical text-to-speech synthesis and the VALL-E sample on the finish.

The outcomes are blended, with some sounding machine-like and others being surprisingly lifelike. The incontrovertible fact that it retains the emotional tone of the unique samples is what sells those that work. It additionally faithfully matches the acoustic surroundings, so if the speaker recorded their voice in an echo-y corridor, the VALL-E output additionally sounds prefer it got here from the identical place.

To enhance the mannequin, Microsoft plans to scale up its coaching knowledge “to improve the model performance across prosody, speaking style, and speaker similarity perspectives.” It’s additionally exploring methods to cut back phrases which are unclear or missed.

Microsoft elected to not make the code open supply, presumably as a result of dangers inherent with AI that can put phrases in somebody’s mouth. It added that it might comply with its “Microsoft AI Principals” on any additional improvement. “Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating,” the corporate wrote within the “Broader impacts” part of its conclusion.

All merchandise really helpful by Engadget are chosen by our editorial crew, impartial of our mum or dad firm. Some of our tales embody affiliate hyperlinks. If you purchase one thing via certainly one of these hyperlinks, we could earn an affiliate fee. All costs are appropriate on the time of publishing.

…. to be continued

Read the Original Article

Copyright for syndicated content material belongs to the linked Source : Engadget – https://www.engadget.com/microsofts-vall-e-ai-can-simulate-any-persons-voice-from-a-short-audio-sample-112520213.html?src=rss